AI-centric cloud platform ready for intensive workloads

Get NVIDIA H100 GPUs from $2.12 per hour✻. A training-ready platform with competitive pricing and a wide range of NVIDIA® Tensor Core GPUs: H100, A100, L40S and V100.

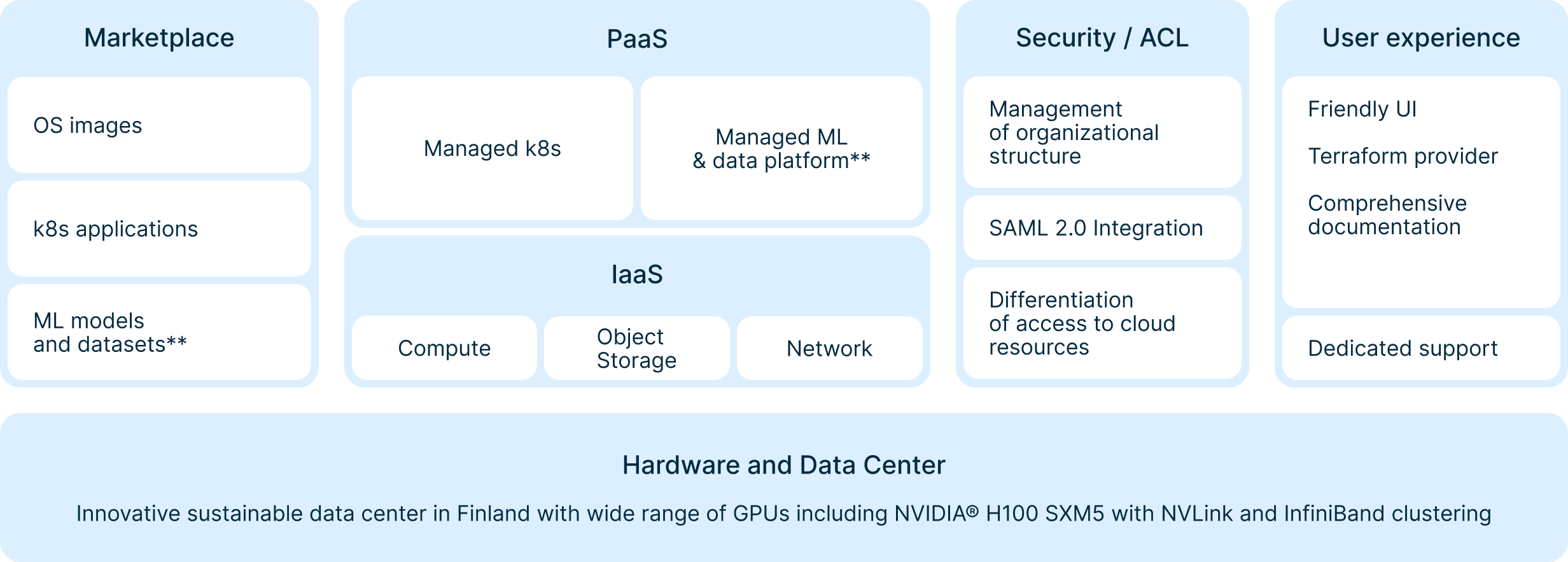

Built for large-scale ML workloads

Get the most out of multihost training on thousands of H100 GPUs connected in a full mesh over the latest InfiniBand network, with up to 3.2 Tbit/s per host.

Onboarding assistance

We guarantee dedicated engineer support to ensure seamless platform adoption. Get your infrastructure optimized and Kubernetes deployed.

Fully managed Kubernetes & Slurm

Simplify the deployment, scaling and management of ML frameworks with Managed Kubernetes, or opt for Slurm for efficient, large-scale job scheduling.

Marketplace with ML frameworks

Explore our Marketplace with its ML-focused libraries, applications, frameworks and tools to streamline your model training.

Easy to use

Enjoy our platform UX: detailed documentation, resource management through our user-friendly cloud console, CLI or Terraform, and VM access via SSH.

Join the GPU auction and save on NVIDIA® H100 GPU hours

Secure the best price on top NVIDIA Tensor Core GPUs with 3.2 Tbit/s InfiniBand! Just make a bid in our user-friendly console and get your cluster.

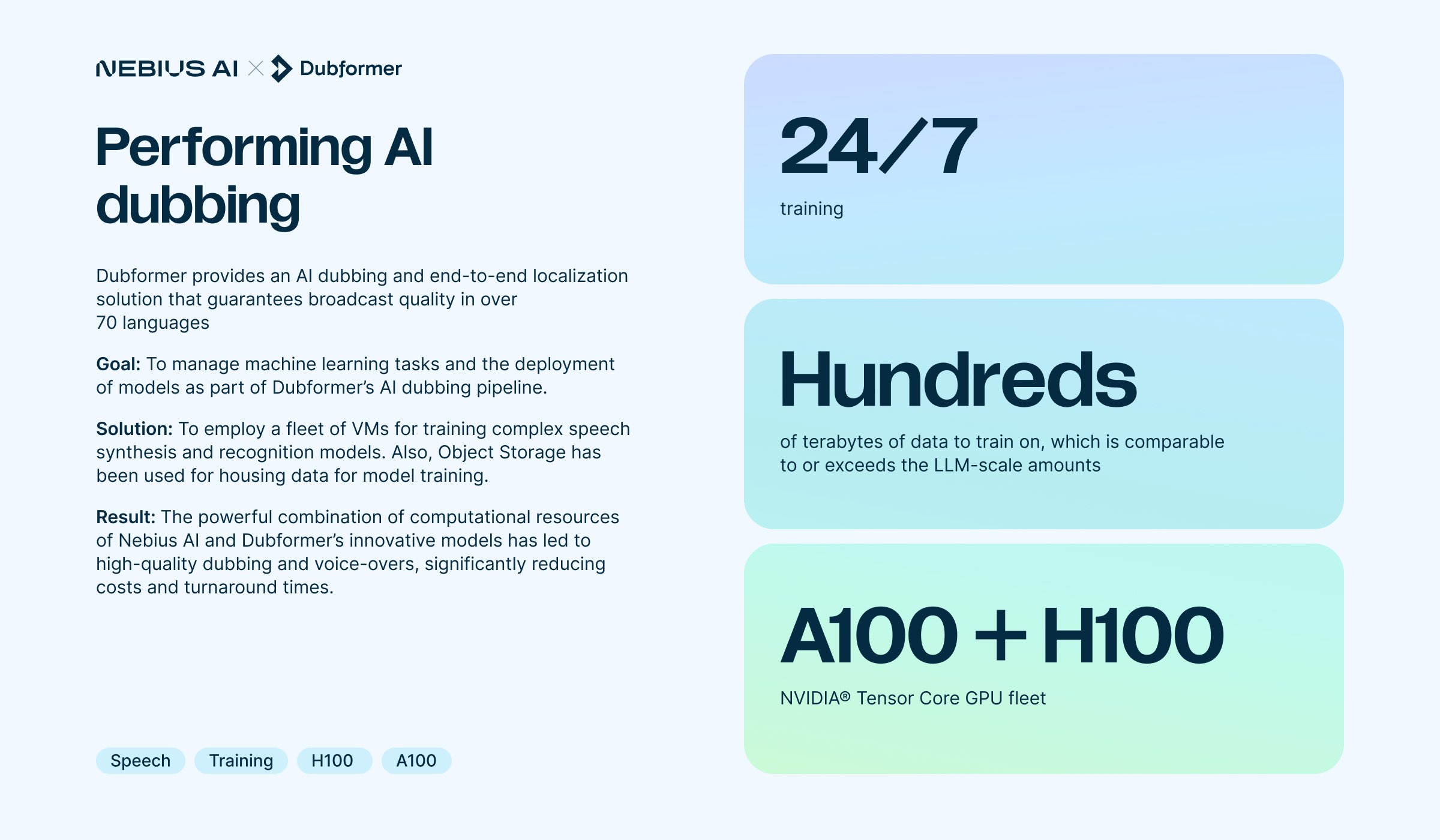

Get a training-ready platform, not just a GPU cloud

Walk around our state-of-the-art data center in Finland

We filmed this video at the Nebius data center, 60 kilometers from Helsinki. This is where we built ISEG, ranked #19 among the most powerful supercomputers in the world. And there’s more: recently, we also constructed a supercluster of 8,000 GPUs installed in servers and racks of our own design.

With Nebius, we’re able to efficiently utilize clusters of L40S GPUs for NOVA-1's video inference for businesses. It is incredibly efficient — we see 40% cost efficiency gains with L40S without sacrificing content quality or video generation speed.

Our consumer-targeted model was initially trained on Nebius infrastructure, and now hundreds of thousands of users are generating personalized videos on the Diffuse app, which is pioneering AI-powered social media content creation on mobile devices.

Alex Mashrabov, Co-founder and CEO at Higgsfield AI

Powered by NVIDIA, the world’s leading GPU manufacturer

As an NVIDIA preferred cloud service provider, we offer access to the NGC Catalog with GPU-accelerated software that speeds up end-to-end workflows with performance-optimized containers, pre-trained ML models, and industry-specific SDKs that can be deployed in the cloud.

Start training and scaling your ML model today

Explore Nebius AI

✻ The price is valid for a 3-year reserve with 50% prepayment.

✻✻ Roadmap 2024

✻✻✻ Compared to Amazon Web Services and Oracle Cloud Infrastructure.

The provided information and prices do not constitute an offer or invitation to make offers or invitation to buy, sell or otherwise use any services, products and/or resources referred to on this website and may be changed by Nebius at any time. Contact sales to get a personalized offer.

All prices are shown without any applicable taxes, including VAT.