GPU clusters with InfiniBand

InfiniBand is a technology that facilitates the seamless connection of servers equipped with GPUs within a cluster. Use GPU clusters with InfiniBand interconnect to accelerate your data-intensive ML workloads.

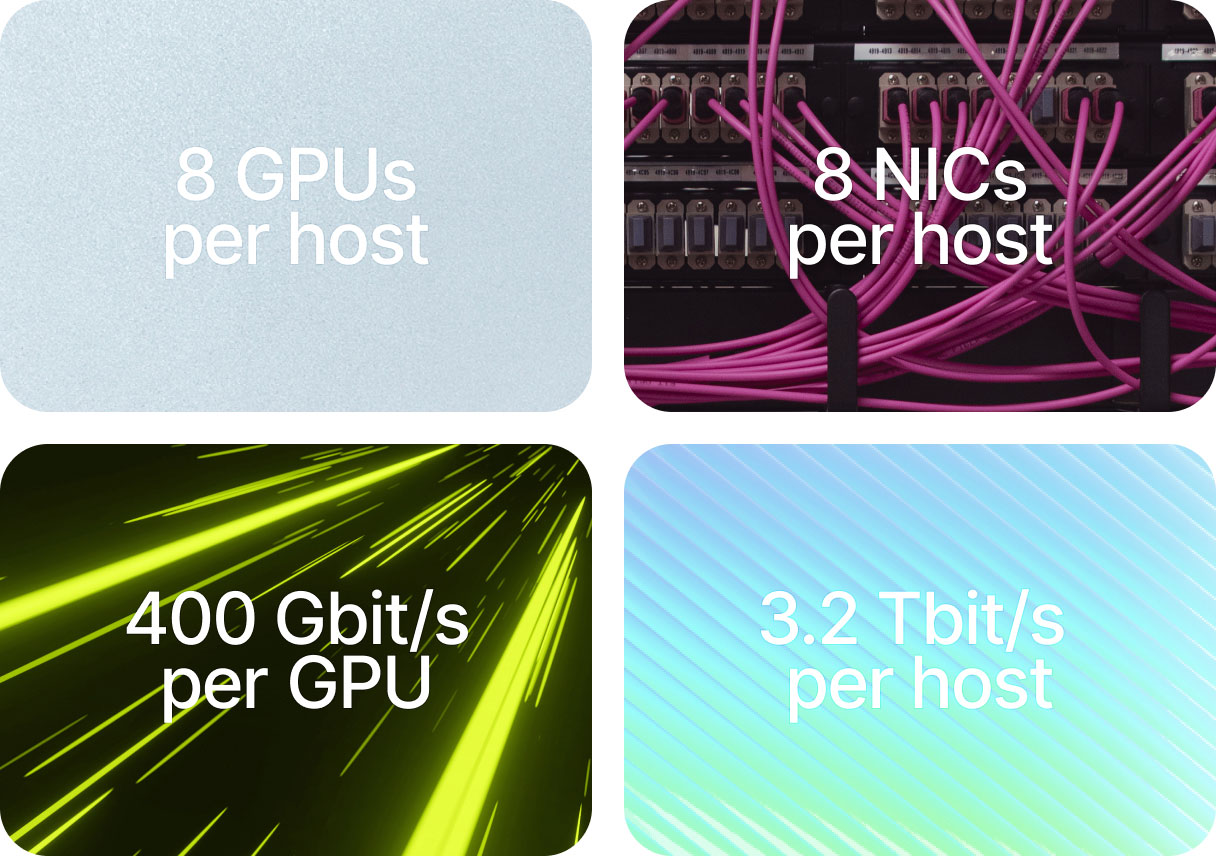

Get a multi-node infrastructure with up to 3.2 Tbit/s of per-host networking performance.

High-speed data transfer

InfiniBand provides the ultra-high data transfer speeds essential for ML workflows that require rapid processing and analysis of large volumes of data.

Scalability

Large-scale clustering and parallel processing make InfiniBand indispensable for complex and resource-intensive algorithms.

Reliability

High reliability and fault tolerance provided by InfiniBand ensure that ML workflows run smoothly with minimal downtime.

Low latency

InfiniBand offers low latency communication required by real-time ML applications with quick decision-making and response times.

Cost-effectiveness

InfiniBand can be a cost-effective solution for engineers who need high-performance networking capabilities without investing in expensive proprietary hardware.

Training-optimized configuration

Training-optimized configuration

We provide GPU clusters with NVIDIA® Hopper® H100 SXM GPUs.

A host consists of 8 GPUs. Each GPU features up to 400 Gbit/s connection, providing up to 3.2 Tbit/s network bandwidth per host.

You can use Marketplace images adapted for GPU clusters, e.g. Ubuntu 22.04 LTS for NVIDIA® GPU clusters (CUDA® 12).

Why InfiniBand is essential for ML workloads

Model parallelism

This innovative approach harnesses the formidable power of InfiniBand, enabling the seamless training of expansive ML models across the entire cluster. This is an exceptional strategy for training transformer models with an extensive array of parameters.

Data parallelism

To expedite the training process, you can part your data into segments and leverage multiple GPU nodes for concurrent, parallelized training. This approach significantly reduces the overall training time by distributing the workload evenly across the available resources.

Hardware efficiency

Hardware efficiency

We understand the importance of using hardware as efficiently as possible. That’s why we design and assemble servers specifically tailored for hosting modern GPUs like the NVIDIA H100.

Our latest server rack generation presents node solutions tuned for ML training and inference.

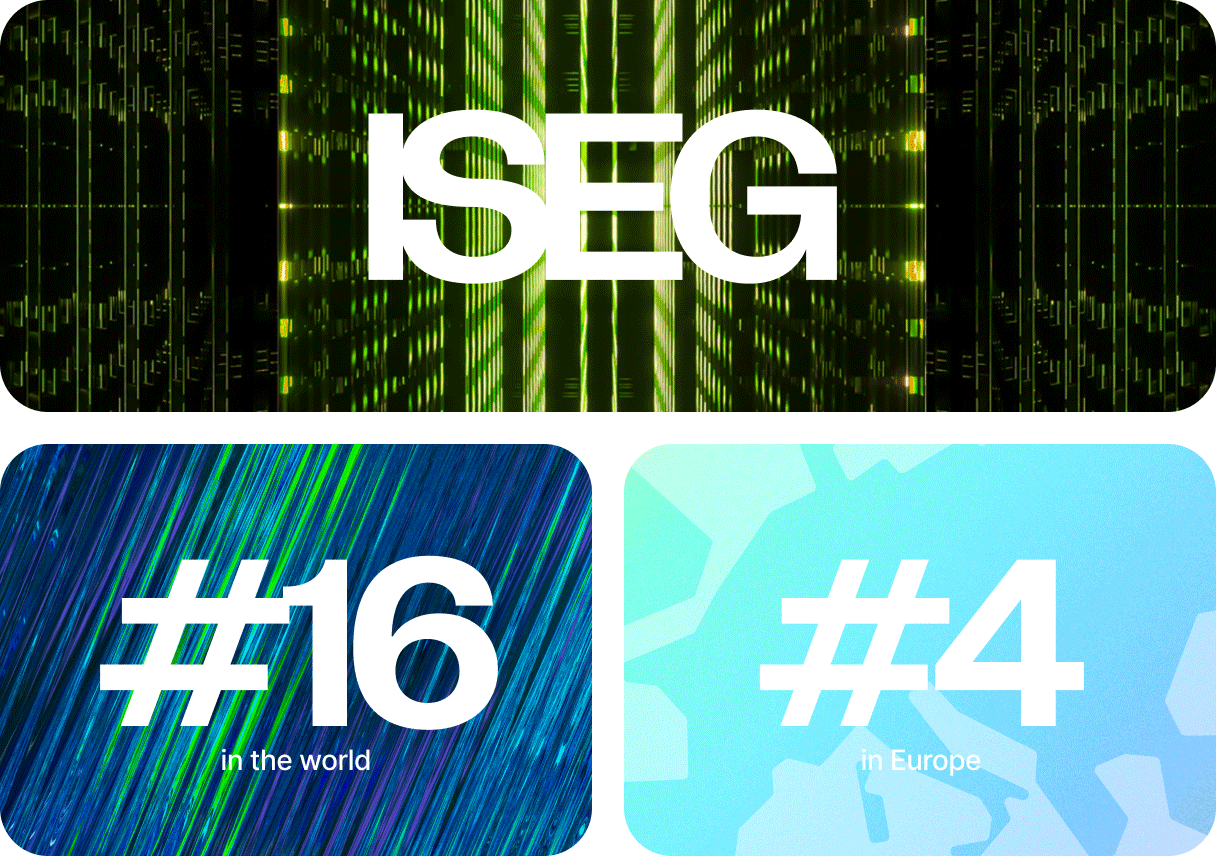

How we built ISEG, a top-16 supercomputer in the world

How we built ISEG, a top-16 supercomputer in the world

We weren’t aiming to create a supercomputer. Yet our R&D team decided to test a part of the platform which was free of customers’ workloads at that moment. For that, they used a benchmark from the Top 500.

ISEG is now 16th in the world’s ranking — and 4th in Europe.