Sign up and get instant access to V100 GPUs

A single NVIDIA V100 Tensor Core GPU offers the performance of dozens of CPUs but costs only as much as two. Register now to access V100 GPUs at Nebius AI!

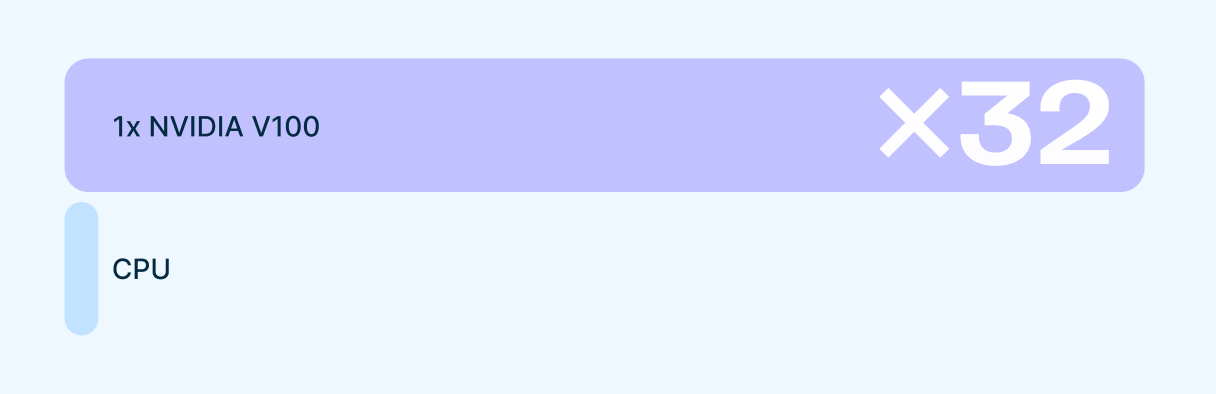

V100 vs CPU: performance comparison

According to NVIDIA research, the V100 delivers significantly higher training and inference performance than CPU-only configurations.

Training

32x higher training throughput than a CPU

Inference

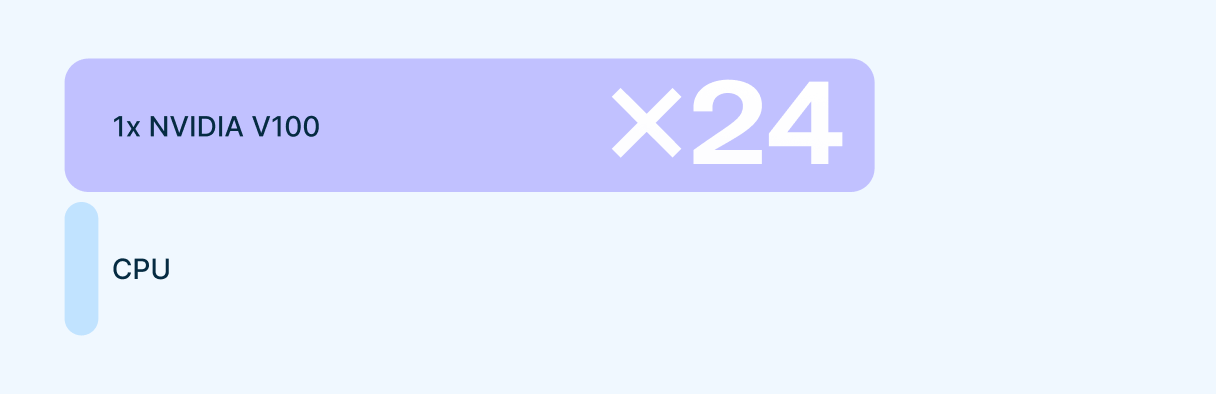

24x higher inference throughput than a CPU server

Preemptible V100

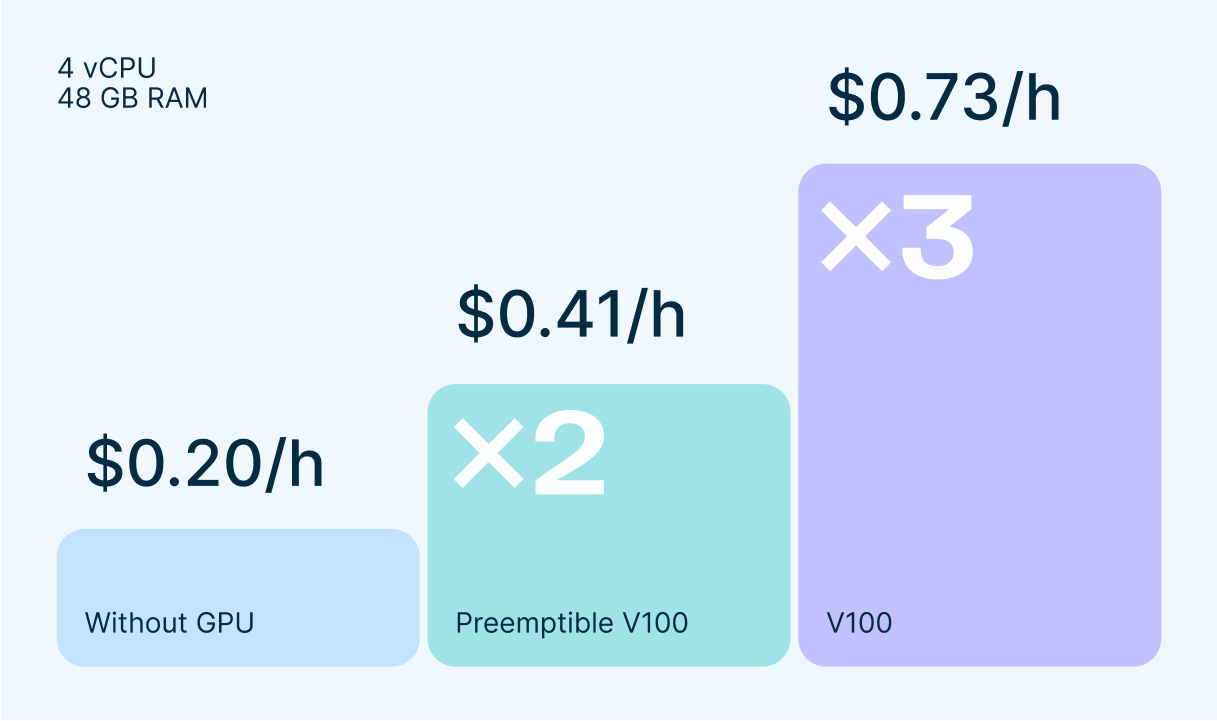

Despite this performance advantage over CPUs, a V100 in the minimal preemptible configuration costs only as much as two CPUs, and the non-preemptible configuration costs only as much as three.

Use preemptible V100s for tasks that can be interrupted and resumed, and for workloads that are not time-sensitive.
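To illustrate what "interrupted and resumed" can mean in practice, here is a minimal Python sketch of a job that checkpoints its progress and picks up where it left off after a preemption. It is not a Nebius AI API: the function names (`save_checkpoint`, `load_checkpoint`, `run`), the JSON checkpoint file, and the toy workload are all assumptions made for illustration, and a real training job would save model and optimizer state instead.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write state atomically so a preemption never leaves a half-written file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic rename over the previous checkpoint


def load_checkpoint(path):
    """Return the saved state, or a fresh one if no checkpoint exists yet."""
    if not os.path.exists(path):
        return {"step": 0, "total": 0}
    with open(path) as f:
        return json.load(f)


def run(path, total_steps, stop_after=None):
    """Hypothetical job: sums the step indices, checkpointing after every step.

    stop_after simulates a preemption after that many steps in this run.
    """
    state = load_checkpoint(path)
    done_this_run = 0
    while state["step"] < total_steps:
        if stop_after is not None and done_this_run >= stop_after:
            return state  # simulated preemption: exit with work unfinished
        state["total"] += state["step"]  # placeholder for one unit of real work
        state["step"] += 1
        save_checkpoint(path, state)
        done_this_run += 1
    return state
```

Calling `run("ckpt.json", 10, stop_after=4)` and then `run("ckpt.json", 10)` finishes the remaining six steps without repeating the first four. Checkpointing after every step keeps the example simple; a real job would typically checkpoint less often to limit I/O overhead.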

V100 vs other GPUs

According to an in-depth GPU performance comparison by Nebius AI, V100 is the go-to choice for fine-tuning and inference with FP16/FP32 precision.

The minimal configuration costs 17.52 USD/hour, making V100 the most affordable option. With our flexible pricing policy, you can choose the option that best fits your needs.

Check out our pricing calculator to estimate the cost of your required configuration.

Sign up and get access to V100 right away

Consider NVIDIA V100 for working with small and mid-size models that do not require BF16 precision support.

V100 is much more affordable than other GPU types, which can significantly reduce your compute costs.