Nebius AI is among the first cloud providers adopting NVIDIA B200 Tensor Core GPUs

With a keen eye on power usage effectiveness, Nebius AI is excited to be among the first cloud providers adopting NVIDIA® B200 Tensor Core GPUs and offering the advanced, energy-efficient technology to customers.

The new NVIDIA Blackwell platform for accelerated computing — a groundbreaking technology for generative AI — enables AI practitioners to efficiently build and run real-time inference on trillion-parameter large language models (LLMs).

Blackwell Tensor Cores and the NVIDIA TensorRT-LLM

What is so unique about Blackwell?

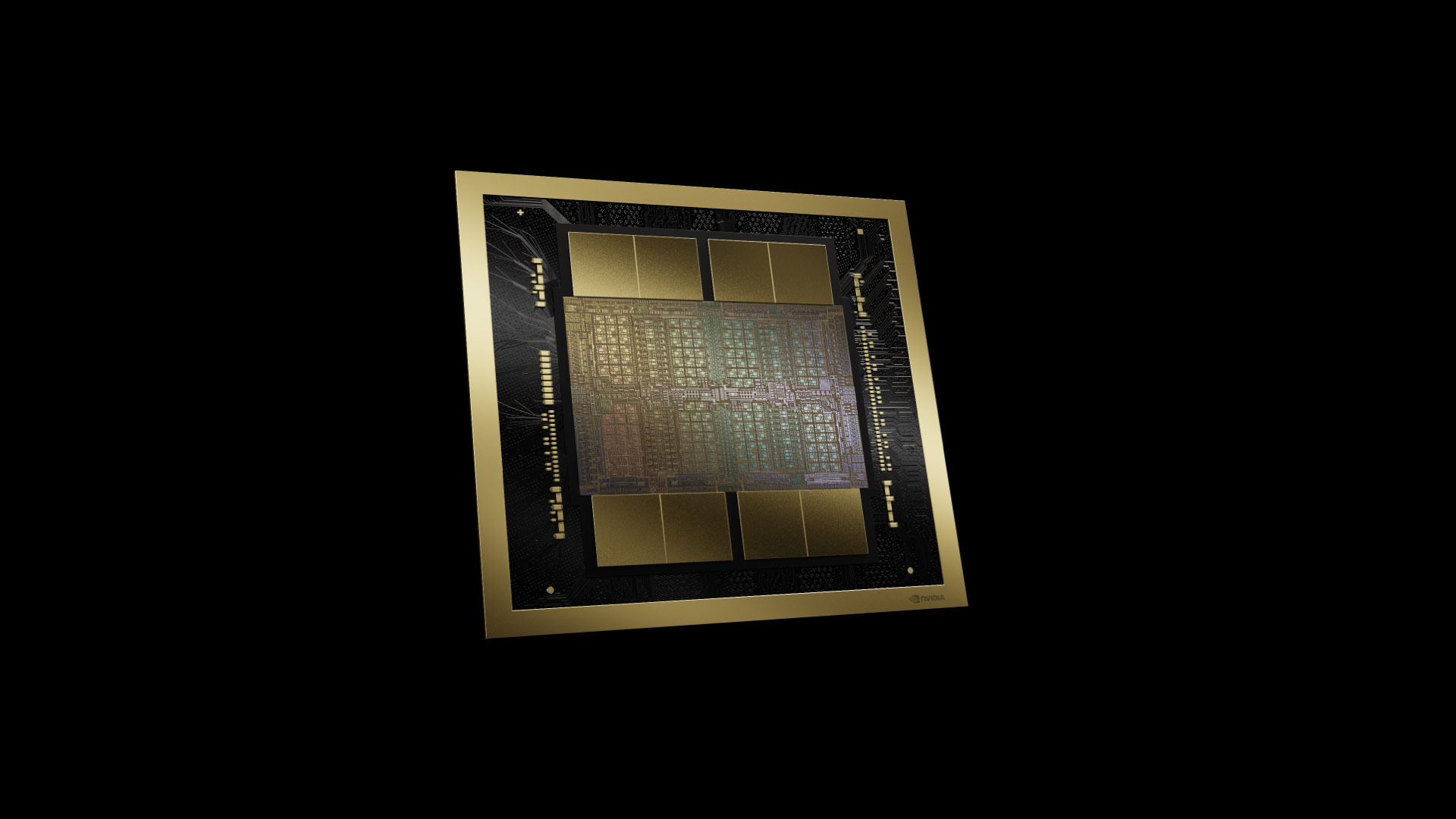

There are six revolutionary technologies inside the new architecture. Blackwell is the basis of the world’s most powerful chip, which is packed with 208 billion transistors. It also includes a second-generation Transformer Engine, fifth-generation NVLink interconnect, advanced confidential computing capabilities, a dedicated decompression engine, and a RAS Engine that adds preventative maintenance, diagnostics and reliability-forecasting capabilities at the chip level.

The RAS Engine uses AI-based preventative maintenance to run diagnostics and forecast reliability issues. This maximises system uptime and improves resiliency, so that massive-scale AI deployments can run uninterrupted for weeks or even months at a time, which in turn reduces operating costs.

NVIDIA B200 Tensor Core GPU

Based on the Blackwell platform, the NVIDIA B200 Tensor Core GPU delivers a massive leap forward in speeding up inference workloads, making real-time performance a possibility for resource-intensive, multitrillion-parameter language models.

For the highest AI performance, NVIDIA GB200 Grace Blackwell-powered systems can be connected with the NVIDIA Quantum-X800 InfiniBand and Spectrum™-X800 Ethernet platforms, which were also announced today.

To help its partner network accelerate development of Blackwell-based servers, NVIDIA offers HGX B200, a server board that links eight B200 GPUs through high-speed interconnects. It allows developers to build the world’s most powerful x86 generative AI platforms. HGX B200 supports networking speeds of up to 400 Gbit/s through the NVIDIA Quantum-2 InfiniBand and NVIDIA Spectrum-X Ethernet platforms, and it also supports NVIDIA BlueField-3 DPUs.
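To put the 400 Gbit/s figure in more familiar units, here is a rough back-of-the-envelope sketch in Python. It assumes an ideal link running at full line rate with no protocol overhead, and the 100 GB payload is a purely hypothetical example rather than a figure from NVIDIA or Nebius AI. Real-world throughput will be lower.

```python
def gbit_per_s_to_gbyte_per_s(gbit_per_s: float) -> float:
    """Convert a link rate in gigabits per second to gigabytes per second."""
    return gbit_per_s / 8.0  # 8 bits per byte


def ideal_transfer_seconds(payload_gbytes: float, link_gbit_per_s: float) -> float:
    """Lower-bound transfer time at full line rate, ignoring all overhead."""
    return payload_gbytes / gbit_per_s_to_gbyte_per_s(link_gbit_per_s)


LINK_GBIT_PER_S = 400.0         # networking speed quoted for HGX B200
EXAMPLE_PAYLOAD_GBYTES = 100.0  # hypothetical payload size (assumption)

if __name__ == "__main__":
    rate = gbit_per_s_to_gbyte_per_s(LINK_GBIT_PER_S)
    seconds = ideal_transfer_seconds(EXAMPLE_PAYLOAD_GBYTES, LINK_GBIT_PER_S)
    print(f"{LINK_GBIT_PER_S:.0f} Gbit/s is about {rate:.0f} GB/s")
    print(f"{EXAMPLE_PAYLOAD_GBYTES:.0f} GB would take at least {seconds:.1f} s")
```

Under these assumptions, a single 400 Gbit/s link moves at most 50 GB per second, so the hypothetical 100 GB payload needs at least two seconds.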

We anticipate that NVIDIA Blackwell-based products will be available from partners starting later this year.