Train your AI model on an NVIDIA® H200 GPU cluster with InfiniBand

This fall, we’re adding H200 SXM GPUs to Nebius AI.

Reserve your cluster right away!

H200: the most powerful GPU for your AI and HPC workloads

Prices for H200

$4.85 GPU/h

Price per GPU on a pay-as-you-go basis. No commitments. Available in self-service mode.

$2.50 GPU/h

Price per GPU with a 3-year reservation commitment for a minimum of 64 GPUs.
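
To compare the two rates for your own cluster size, here is a minimal Python sketch. It assumes GPUs are billed around the clock, a year of 365 days, and no other charges (storage, networking, taxes). The function and constant names are illustrative and not part of any Nebius API.

```python
# Rates taken from the price list above (USD per GPU-hour).
PAYG_RATE = 4.85      # pay-as-you-go, no commitment
RESERVED_RATE = 2.50  # 3-year commitment, minimum 64 GPUs

# Assumption: 365-day years and GPUs billed 24 hours a day.
HOURS_PER_YEAR = 365 * 24


def cluster_cost(gpus: int, hours: float, rate: float) -> float:
    """Return the total cost in USD for `gpus` GPUs running `hours` hours."""
    return gpus * hours * rate


def reserved_discount() -> float:
    """Return the fractional saving of the reserved rate over pay-as-you-go."""
    return 1 - RESERVED_RATE / PAYG_RATE


if __name__ == "__main__":
    gpus = 64
    hours = 3 * HOURS_PER_YEAR
    print(f"Reserved:      ${cluster_cost(gpus, hours, RESERVED_RATE):,.0f}")
    print(f"Pay-as-you-go: ${cluster_cost(gpus, hours, PAYG_RATE):,.0f}")
    print(f"Saving:        {reserved_discount():.1%}")
```

Under these assumptions, the reserved rate is about 48% lower per GPU-hour than pay-as-you-go. Actual savings depend on how much of the reserved capacity you keep busy.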

Personal offer

Get the best offer tailored to your AI project.

H200 vs H100


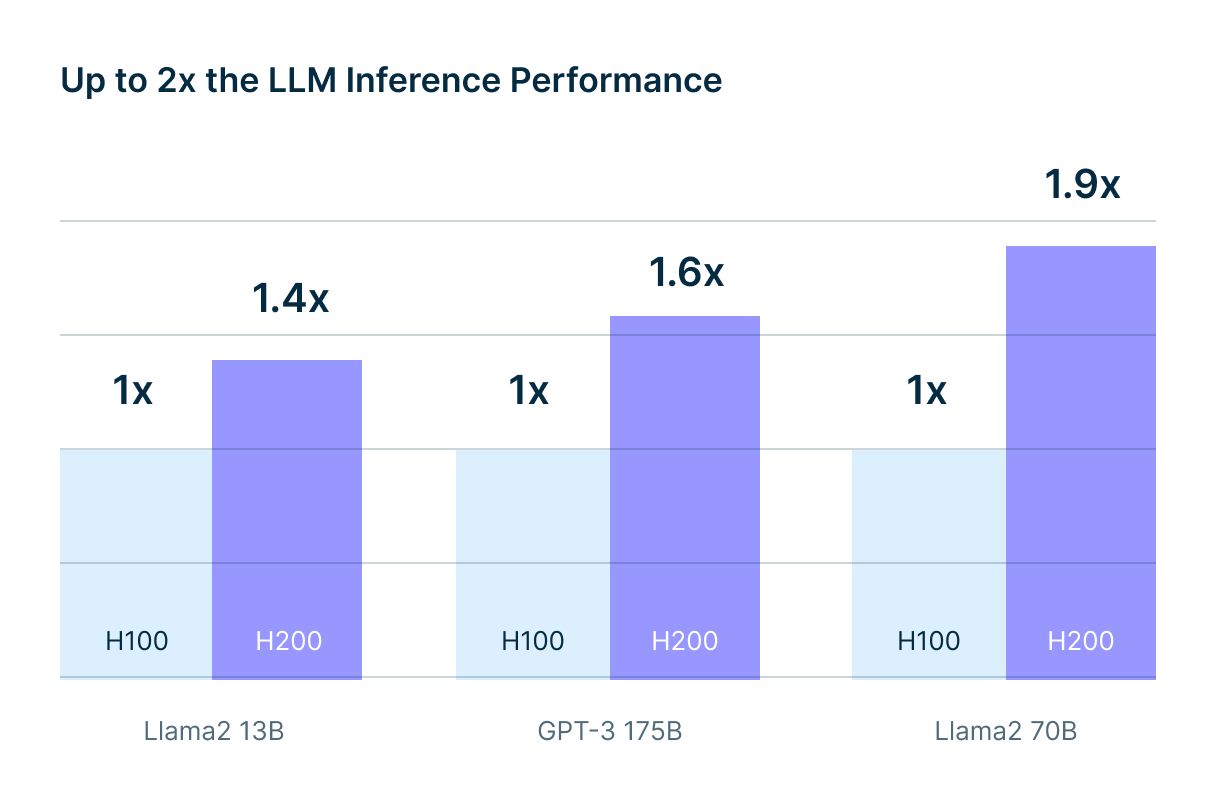

According to NVIDIA's research, the H200 outperforms the H100 on popular LLMs: Llama2 13B, Llama2 70B, and GPT-3 175B.

The H200 SXM delivers up to 45% better performance than the H100 SXM in generative AI and HPC tasks.

GPU clusters with InfiniBand

InfiniBand is a high-speed networking technology that connects GPU-equipped servers within a cluster. Multi-node interconnect bandwidth reaches up to 3.2 Tbit/s.

Reserve H200 right now

The H200 Tensor Core GPU revolutionizes generative AI and high-performance computing workloads with exceptional performance and advanced memory capabilities.

H200 is already available at Nebius AI!